Understanding Regex

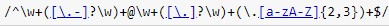

As a software developer, you have probably encountered regular expressions many times and been confused by the daunting sets of characters they group together.

You may have wondered what they are all about.

Regular expressions (RegEx or RegExp) are very useful for stepping up your algorithm game and can make you a better problem solver. The structure of a RegEx can be intimidating at first, but it is very rewarding once you understand the patterns and apply them properly in your work.

What is RegEx and why is it important?

A RegEx, or regular expression, is a type of object that helps you extract information from string data by searching through text for what you need. Whether it's punctuation, numbers, letters, or even white space, RegEx allows you to check and match any combination of characters in strings.

For example, suppose you need to match the format of email addresses or security numbers. You can use RegEx to check for the pattern inside text strings and to replace the matching substring with another one.

For instance, a RegEx could tell the program to search for specific text in a string and then print the output accordingly. Expressions can include text matching, repetition, branching, and pattern composition.

Python supports RegEx through libraries. In RegEx supports for various things like

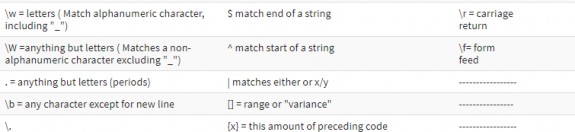

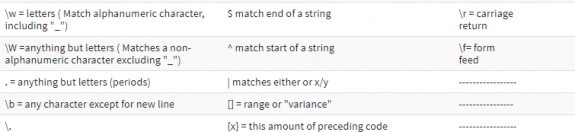

Identifiers, Modifiers, and White Space.

RegEx Syntax

import re

The re library in Python is used for string searching and manipulation. It is also used frequently for web scraping.

Example for \w+ and ^ Expression

^: This expression matches the start of a string.

\w+: This expression matches one or more alphanumeric characters (letters, digits, or underscores) in the string.

Here is an example of how you can use the "\w+" and "^" expressions in code. re.findall is covered in a later part, so just focus on the "\w+" and "^" expressions.

Take the example "LiveAdmins13, Data is a new fuel". If we execute the code, we get "LiveAdmins13" as the result.

import re

sent = "LiveAdmins13, Data is a new fuel"
r2 = re.findall(r"^\w+", sent)
print(r2)

In [12]:

['LiveAdmins13']

Note: If we remove the '+' sign from \w+, the output changes and gives only the first character of the first word, i.e. ['L'].
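As a small sketch of the note above, the two patterns can be run side by side on the same sentence. The variable names first_word and first_char are only illustrative.

```python
import re

sent = "LiveAdmins13, Data is a new fuel"

# ^ anchors the match at the start; \w+ consumes one or more word characters.
first_word = re.findall(r"^\w+", sent)

# Without the +, \w matches exactly one word character.
first_char = re.findall(r"^\w", sent)

print(first_word)  # ['LiveAdmins13']
print(first_char)  # ['L']
```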

Example of \s expression in re.split function

\s: This expression matches a white space character in the string.

To understand this expression better, we will use the split function in a simple example. Here we split the sentence into words using the "re.split" function, with \s as the separator so that each word in the string is parsed separately.

import re

print((re.split(r'\s','We splited this sentence')))

In [ ]:

['We', 'splited', 'this', 'sentence']

As we can see above, we got the output ['We', 'splited', 'this', 'sentence']. But what if we remove the '\' from '\s'? The pattern then matches the literal letter 's', so the sentence is split at every 's' and those letters are removed. Let's see this in the example below.

import re

print((re.split(r's','We splited this sentence')))

In [ ]:

['We ', 'plited thi', ' ', 'entence']
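One detail worth knowing when splitting on \s: each single white space character is a separator, so repeated spaces produce empty strings. A minimal sketch, assuming an input with a double space and a tab (the input string is made up for illustration), compares \s with \s+:

```python
import re

# Made-up input: two spaces after "We" and a tab before "sentence".
text = "We  splited this\tsentence"

# \s splits at every single white space character.
single = re.split(r"\s", text)

# \s+ treats a run of white space as one separator.
runs = re.split(r"\s+", text)

print(single)  # ['We', '', 'splited', 'this', 'sentence']
print(runs)    # ['We', 'splited', 'this', 'sentence']
```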

Similarly, there is a series of other regular expressions in Python that you can use in various ways, such as \d, \D, $, ., \b, etc.
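The following sketch tries each of the expressions just listed on one made-up sentence. The sentence and variable names are assumptions chosen only to make the behaviour visible.

```python
import re

text = "Order 66 shipped on day 7."

digits = re.findall(r"\d+", text)               # runs of digits
non_digits = re.findall(r"\D+", text)           # runs of non-digit characters
ends_with_dot = bool(re.search(r"\.$", text))   # $ anchors at the end of the string
whole_word = re.findall(r"\bday\b", text)       # \b marks a word boundary
first_three = re.findall(r"^...", text)         # . matches any character except a newline

print(digits)         # ['66', '7']
print(non_digits)     # ['Order ', ' shipped on day ', '.']
print(ends_with_dot)  # True
print(whole_word)     # ['day']
print(first_three)    # ['Ord']
```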

Use RegEx methods

The "re" package provides several methods to perform queries on an input string. We will look at the following methods:

re.match(), re.search(), re.findall()

Note: Python offers two different primitive operations based on RegEx. The match method checks for a match only at the beginning of the string, while search checks for a match anywhere in the string.
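To make the difference between the two operations concrete, here is a minimal sketch on the sentence used earlier. The variable names are illustrative only.

```python
import re

text = "Data is a new fuel"

# match only succeeds if the pattern is found at the very start of the string.
at_start = re.match(r"Data", text)
not_at_start = re.match(r"fuel", text)

# search scans the whole string and returns the first match it finds.
anywhere = re.search(r"fuel", text)

print(at_start is not None)  # True
print(not_at_start)          # None
print(anywhere.span())       # (14, 18)
```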

Using re.match()

The match function is used to match a RegEx pattern against a string, with optional flags. In this example, "\w+" and "\W" match pairs of words that each start with "i" and are separated by a non-word character; any string that does not fit this pattern is not matched.

import re

lists = ['icecream images', 'i immitated', 'inner peace']

for i in lists:
    q = re.match(r"(i\w+)\W(i\w+)", i)
    if q:
        print(q.groups())

In [ ]:

('icecream', 'images')
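Only one of the three strings produces output. The sketch below records the result for every string so the reason is visible: "i immitated" fails because "i\w+" needs at least one word character after the "i", and "inner peace" fails because the second word does not start with "i". The results dictionary is an illustrative helper, not part of the original example.

```python
import re

pattern = re.compile(r"(i\w+)\W(i\w+)")
lists = ['icecream images', 'i immitated', 'inner peace']

# Map each input string to its captured groups, or None when match fails.
results = {}
for s in lists:
    m = pattern.match(s)
    results[s] = m.groups() if m else None

for s, groups in results.items():
    print(s, "->", groups)
```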

Finding Pattern in the text(re.search())

A RegEx is commonly used to search for a pattern in text. The re.search() method takes a RegEx pattern and a string and searches for that pattern within the string.

To use the re.search() function, you need to import re first. The search() function takes the "pattern" and the "text" to scan, and returns a match object when the pattern is found, or None otherwise.

import re

pattern = ["playing", "LiveAdmins"]
text = "Haroon is playing outside."

for p in pattern:
    print("You're looking for '%s' in '%s'" % (p, text), end=' ')
    if re.search(p, text):
        print('Found match!')
    else:
        print("no match found!")

In [13]:

You're looking for 'playing' in 'Haroon is playing outside.' Found match!

You're looking for 'LiveAdmins' in 'Haroon is playing outside.' no match found!

In the above example, we look for two literal strings, "playing" and "LiveAdmins", in the text string "Haroon is playing outside.". For "playing" we got a match, so the output is "Found match!", while for "LiveAdmins" we didn't get any match, so the output is "no match found!".

Using re.findall() for text

Use re.findall() when you want to list all the matches in one go, for example while iterating over the lines of a file. In this example, we want to fetch all the email addresses from a string, so we use the re.findall() method.

import re

kgf = "khurram@LiveAdmins.ai, haroon@LiveAdmins.ai, saleet@LiveAdmins.ai, rehan@LiveAdmins.ai"
emails = re.findall(r'[\w\.-]+@[\w\.-]+', kgf)

for e in emails:
    print(e)

In [14]:

khurram@LiveAdmins.ai
haroon@LiveAdmins.ai
saleet@LiveAdmins.ai
rehan@LiveAdmins.ai
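The pattern used above is deliberately loose, and in running prose it can pick up trailing punctuation. The sketch below shows this on a made-up sentence and compares it with a slightly stricter pattern. The stricter pattern is an assumption for illustration, not a complete email validator.

```python
import re

# Made-up text where an address is followed by a full stop.
text = "Write to khurram@LiveAdmins.ai. Or try haroon@LiveAdmins.ai!"

# Loose pattern from the example: the domain part may end with a dot.
loose = re.findall(r"[\w\.-]+@[\w\.-]+", text)

# Hypothetical stricter pattern: every dot in the domain must be followed by a label.
stricter = re.findall(r"[\w.-]+@[\w-]+(?:\.[\w-]+)+", text)

print(loose)     # ['khurram@LiveAdmins.ai.', 'haroon@LiveAdmins.ai']
print(stricter)  # ['khurram@LiveAdmins.ai', 'haroon@LiveAdmins.ai']
```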

Python Flags

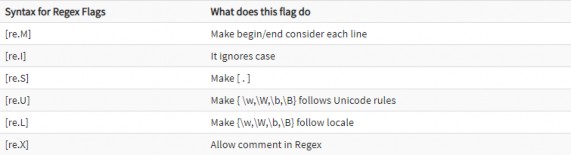

Many Python RegEx methods and functions take an optional flags argument. A flag can modify the meaning of the given RegEx pattern. Python includes various such flags.

Let's look at an example of the re.M, or multiline, flag.

With the multiline flag, the pattern character "^" matches at the start of the string and at the beginning of each line. The "\w" matches a single word character. When you run the code, the first variable "q1" gives only the character "L", while "q2", which uses the multiline flag, gives the first character of every line.

import re

aa = """LiveAdmins13
Machine
Learning"""

q1 = re.findall(r"^\w", aa)
q2 = re.findall(r"^\w", aa, re.MULTILINE)
print(q1)
print(q2)

In [15]:

['L']

['L', 'M', 'L']

Likewise, you can also use other Python flags like re.U (Unicode), re.L (Follow locale), re.X (Allow Comment), etc.
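Flags can also be combined with the | operator. The sketch below, on a made-up three-line string, combines re.MULTILINE with re.IGNORECASE and shows re.VERBOSE (re.X), which lets a pattern carry white space and comments.

```python
import re

text = "Data\ndata\nDATA"

# Multiline only: ^ matches at each line start, but the match is case-sensitive.
case_sensitive = re.findall(r"^data", text, re.MULTILINE)

# Multiline and ignore-case combined with |.
case_insensitive = re.findall(r"^data", text, re.MULTILINE | re.IGNORECASE)

# Verbose mode ignores white space in the pattern and allows # comments.
verbose_pattern = re.compile(
    r"""
    ^\w+    # a whole word at the start of a line
    """,
    re.VERBOSE | re.MULTILINE,
)
line_starts = verbose_pattern.findall(text)

print(case_sensitive)    # ['data']
print(case_insensitive)  # ['Data', 'data', 'DATA']
print(line_starts)       # ['Data', 'data', 'DATA']
```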


Text normalization

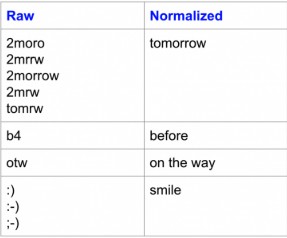

Text normalization is a highly overlooked step in text preprocessing. Text normalization is the process of transforming text into a canonical (or standard) form. For example, "ok" and "k" can be transformed to "okay", their canonical form. Another example is mapping near-identical words such as "preprocessing", "pre-processing" and "pre processing" to just "preprocessing".

Text normalization is very useful for noisy texts such as social media comments, comments on blog posts, and text messages, where abbreviations, misspellings, and out-of-vocabulary (OOV) words are prevalent.

Effects of normalization

Text normalization has even been effective for analyzing highly unstructured clinical texts where physicians take notes in non-standard ways. We have also found it useful for topic extraction where near synonyms and spelling differences are common (like 'topic modelling', 'topic modeling', 'topic-modeling', 'topic-modelling').

Unlike stemming and lemmatization, there is no standard way to normalize texts. It typically depends on the task. For example, the way you would normalize clinical texts would arguably be different from how you normalize text messages.

Some of the common approaches to text normalization include dictionary mappings, statistical machine translation (SMT) and spelling-correction based approaches.
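Of these approaches, dictionary mapping is the simplest to sketch with the re module covered earlier. The mapping below reuses the "okay" and "preprocessing" examples from this section. The names CANONICAL and normalize are hypothetical, and a real mapping would depend on the task.

```python
import re

# Hypothetical mapping from noisy variants to their canonical form.
CANONICAL = {
    "ok": "okay",
    "k": "okay",
    "pre-processing": "preprocessing",
    "pre processing": "preprocessing",
}

# Longest variants first so multi-word entries win over shorter ones.
_variants = sorted(CANONICAL, key=len, reverse=True)
_pattern = re.compile(
    r"\b(" + "|".join(re.escape(v) for v in _variants) + r")\b",
    re.IGNORECASE,
)

def normalize(text):
    """Replace every known variant with its canonical form."""
    return _pattern.sub(lambda m: CANONICAL[m.group(1).lower()], text)

print(normalize("k, the Pre Processing step is ok"))
# okay, the preprocessing step is okay
```

The \b word boundaries keep the short variant "k" from being replaced inside longer words, although hyphenated words such as "k-means" would still be affected by this simple sketch.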

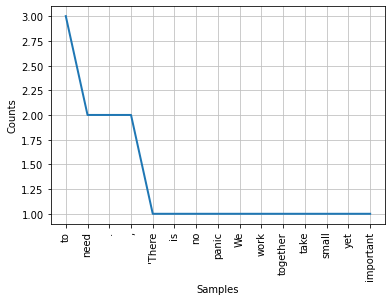

Word Count

Assuming you understand tokenization, the first figure we can calculate is the word frequency. Word frequency tells us how many times each token appears in the text. When talking about word frequency, we distinguish between types and tokens. Types are the distinct words in a corpus, whereas tokens are the words, including repeats. Let's see how this works in practice.

Let's take an example for better understanding:

“There is no need to panic. We need to work together, take small yet important measures to ensure self-protection,” the Prime Minister tweeted.

How many tokens and types are there in the above sentence?

Let's use Python to calculate these figures. First, tokenize the sentence using a tokenizer that uses white space as the separator.

from nltk.tokenize.regexp import WhitespaceTokenizer

m = "'There is no need to panic. We need to work together, take small yet important measures to ensure self-protection,' the Prime Minister tweeted."

Note that above we used a slightly different syntax for importing the module. By now you'll recognize the variable assignment.

tokens = WhitespaceTokenizer().tokenize(m)
print(len(tokens))

In [ ]:

23

tokens

Out[45]:

["'There", 'is', 'no', 'need', 'to', 'panic.', 'We', 'need', 'to', 'work',
 'together,', 'take', 'small', 'yet', 'important', 'measures', 'to', 'ensure',
 "self-protection,'", 'the', 'Prime', 'Minister', 'tweeted.']

my_vocab = set(tokens)
print(len(my_vocab))

In [ ]:

20

my_vocab

Out[47]:

{"'There", 'Minister', 'Prime', 'We', 'ensure', 'important', 'is', 'measures',
 'need', 'no', 'panic.', "self-protection,'", 'small', 'take', 'the', 'to',
 'together,', 'tweeted.', 'work', 'yet'}

Now we are going to perform the same operation with a different tokenizer.

my_st = "'There is no need to panic. We need to work together, take small yet important measures to ensure self-protection,' the Prime Minister tweeted."

We'll import a different tokenizer:

from nltk.tokenize.regexp import WordPunctTokenizer

This tokenizer also splits the text into tokens:

m_t = WordPunctTokenizer().tokenize(my_st)

print(len(m_t))

In [ ]:

30

m_t

Out[51]:

["'", 'There', 'is', 'no', 'need', 'to', 'panic', '.', 'We', 'need', 'to',
 'work', 'together', ',', 'take', 'small', 'yet', 'important', 'measures',
 'to', 'ensure', 'self', '-', 'protection', ",'", 'the', 'Prime', 'Minister',
 'tweeted', '.']

my_vocab = set(m_t)
print(len(my_vocab))

In [ ]:

26

my_vocab

Out[53]:

{"'", ',', ",'", '-', '.', 'Minister', 'Prime', 'There', 'We', 'ensure',
 'important', 'is', 'measures', 'need', 'no', 'panic', 'protection', 'self',
 'small', 'take', 'the', 'to', 'together', 'tweeted', 'work', 'yet'}

What is the difference between the two approaches? In the first one, the vocabulary ends up containing "words" and "words." as two distinct types, whereas in the second one "words" is a single type and "." (i.e. the dot) is split off into a separate token, which results in a new type in addition to "words".
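The same comparison can be sketched with the re module alone, which ties it back to the first half of this article. The two patterns below are assumed to mirror what the two tokenizers do (runs of non-white-space characters, and runs of word characters or of punctuation), and the sentence is reconstructed from the token lists shown above.

```python
import re

# Sentence reconstructed from the token output above (an assumption).
sentence = (
    "'There is no need to panic. We need to work together, take small "
    "yet important measures to ensure self-protection,' the Prime "
    "Minister tweeted."
)

# Split on white space only: punctuation stays attached to the words.
whitespace_tokens = re.findall(r"\S+", sentence)

# Words and punctuation runs become separate tokens.
wordpunct_tokens = re.findall(r"\w+|[^\w\s]+", sentence)

# Print the number of tokens, then the number of types, for each approach.
print(len(whitespace_tokens), len(set(whitespace_tokens)))  # 23 20
print(len(wordpunct_tokens), len(set(wordpunct_tokens)))    # 30 26
```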


Frequency distribution

What is a frequency distribution? It is basically a count of the words in your text. Here is a brief example of how it works:

#from nltk.book import *
import nltk
from nltk.tokenize import word_tokenize
#nltk.download('gutenberg')

print("\n\n\n")
text1 = "'There is no need to panic. We need to work together, take small yet important measures to ensure self-protection,' the Prime Minister tweeted."
freqDist = nltk.FreqDist(word_tokenize(text1))
print(freqDist)

<FreqDist with 23 samples and 28 outcomes>

The FreqDist class works like a dictionary where the keys are the words in the text and the values are the counts associated with those words. For example, to see how many times the word "person" appears in the text, you can type:

print(freqDist["person"])

In [ ]:

0

One of the most important functions in FreqDist is the .keys() function. Let us see what it gives in the code below.

words = freqDist.keys()
print(type(words))

In [ ]:

<class 'dict_keys'>

Running the above code gives the class 'dict_keys'. In other words, you get a view of all the distinct words in your text.

To see how many distinct words there are in the text:

print(len(words))

In [ ]:

23

The nltk.text.Text class offers the same functionality, so what is the difference? The difference is that with FreqDist you can work with your own texts without having to convert them to the nltk.text.Text class.

Another useful function is plot, which displays the most used words in your text. For example, to see the 15 most used words in the text, pass 15 to the plot function.
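If you only need the most used words as text rather than as a chart, the standard library offers a similar view. The sketch below uses collections.Counter as a stand-in for FreqDist (FreqDist is built on Counter), with a plain white space split. This is an assumption for illustration and not the plot function itself.

```python
from collections import Counter

# Sentence reconstructed from the examples above (an assumption).
text = (
    "'There is no need to panic. We need to work together, take small "
    "yet important measures to ensure self-protection,' the Prime "
    "Minister tweeted."
)

counts = Counter(text.split())

# most_common(n) returns the n most frequent tokens with their counts.
top_two = counts.most_common(2)

print(top_two)           # [('to', 3), ('need', 2)]
print(counts["person"])  # 0, a missing word simply counts as zero
```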


Personal Frequency Distribution

Suppose you want to do frequency distribution based on your own personal text. Let's get started,

from nltk import FreqDist

sent = "'There is no need to panic. We need to work together, take small yet important measures to ensure self-protection,' the Prime Minister tweeted."
text_list = sent.split(" ")
freqDist = FreqDist(text_list)
words = list(freqDist.keys())
print(freqDist['need'])

2

In the first line, note that you don't have to import nltk.book to use the FreqDist class.

We then declare the sent and text_list variables. The variable sent is your custom text, and the variable text_list is a list that contains all the words of your custom text. You can see that we used sent.split(" ") to separate the words.

Then you have the variables freqDist and words. freqDist is an object of the FreqDist class built from the text you have given, and words is the list of all keys of freqDist.

The last line of code is where you print your results. In this example, your code prints the count of the word "need".

If you replace "need" with "Prime", it returns 1 instead of 2, because "Prime" appears only once. Note that FreqDist lookups are case-sensitive, so "prime" would return 0. To avoid this, you can apply the .lower() function to the variable sent before splitting it.
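The effect of case on the counts can be sketched without NLTK by using collections.Counter as a stand-in for FreqDist. The sentence is reconstructed from the examples above, and the names raw and lowered are illustrative.

```python
from collections import Counter

# Sentence reconstructed from the examples above (an assumption).
sent = (
    "'There is no need to panic. We need to work together, take small "
    "yet important measures to ensure self-protection,' the Prime "
    "Minister tweeted."
)

raw = Counter(sent.split(" "))
lowered = Counter(sent.lower().split(" "))

print(raw["Prime"])      # 1
print(raw["prime"])      # 0, lookups are case-sensitive
print(lowered["prime"])  # 1, lowercasing first makes the lookup match

# Splitting on spaces keeps punctuation attached to the words.
print(raw["panic"], raw["panic."])  # 0 1
```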
